In Ceph - the upgrade should set noout, nodeep-scrub and noscrub and unset when upgrade will complete · Issue #10619 · rook/rook · GitHub

Feature #40739: mgr/dashboard: Allow modifying single OSD settings for noout/noscrub/nodeepscrub - Dashboard - Ceph

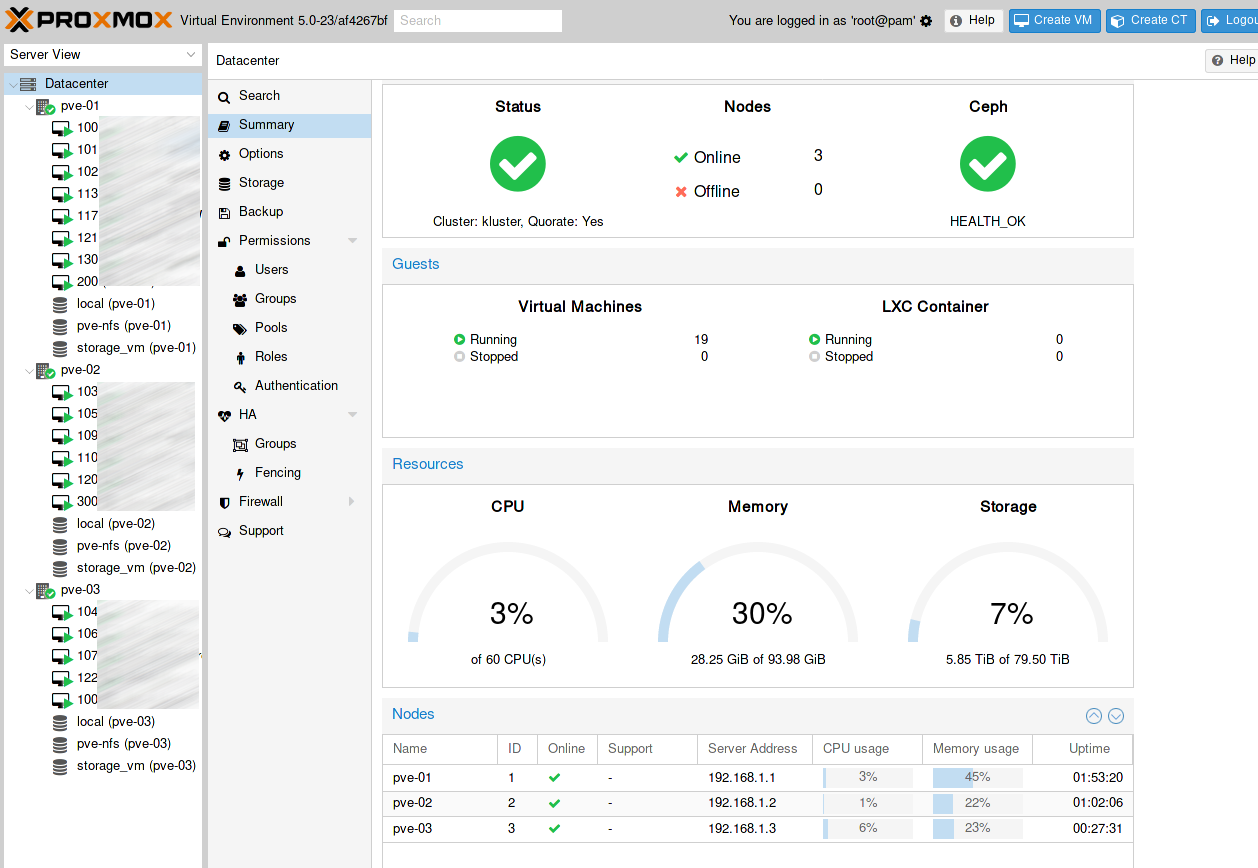

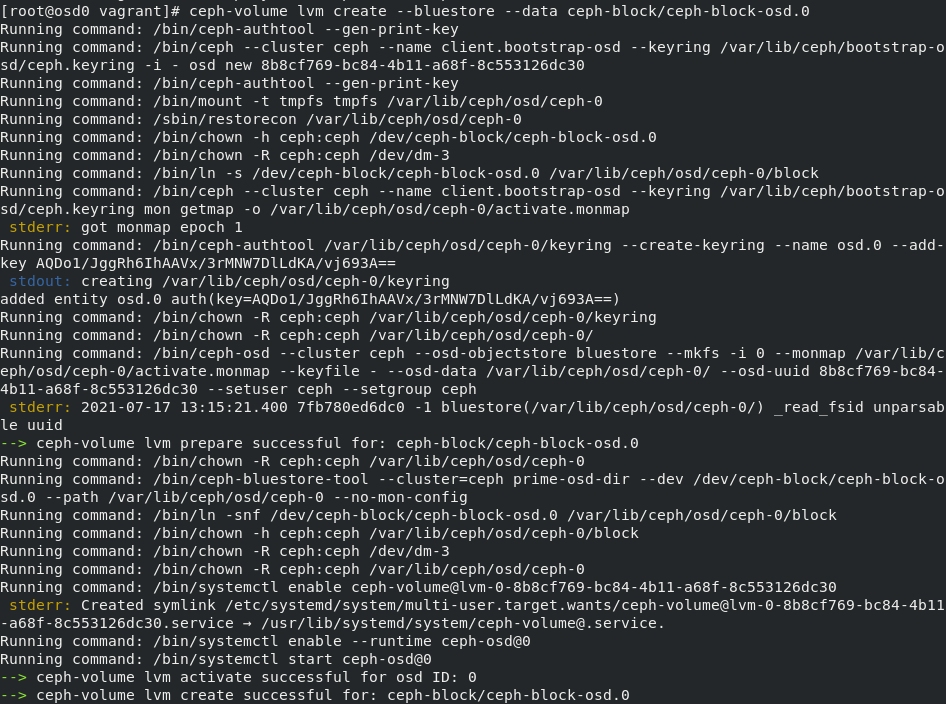

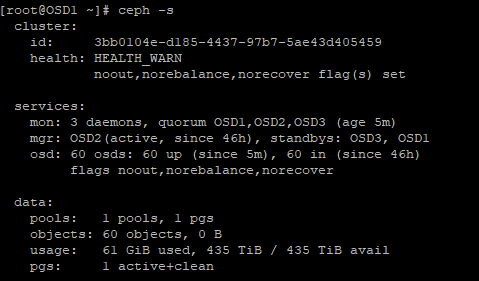

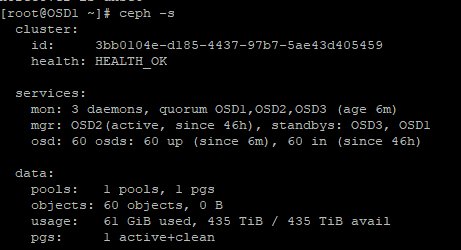

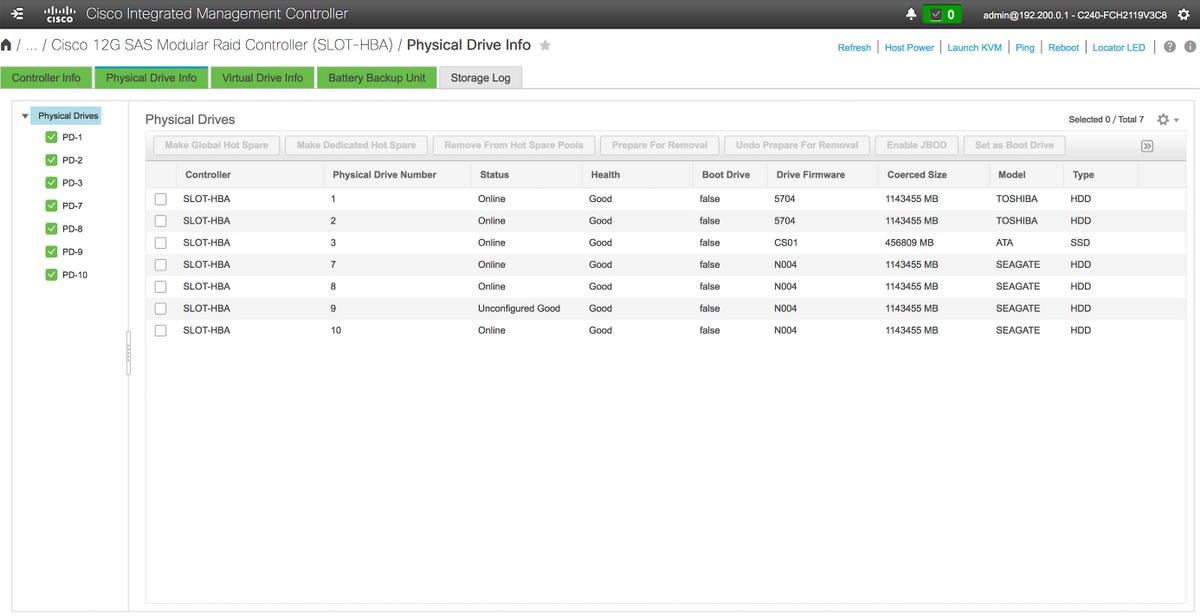

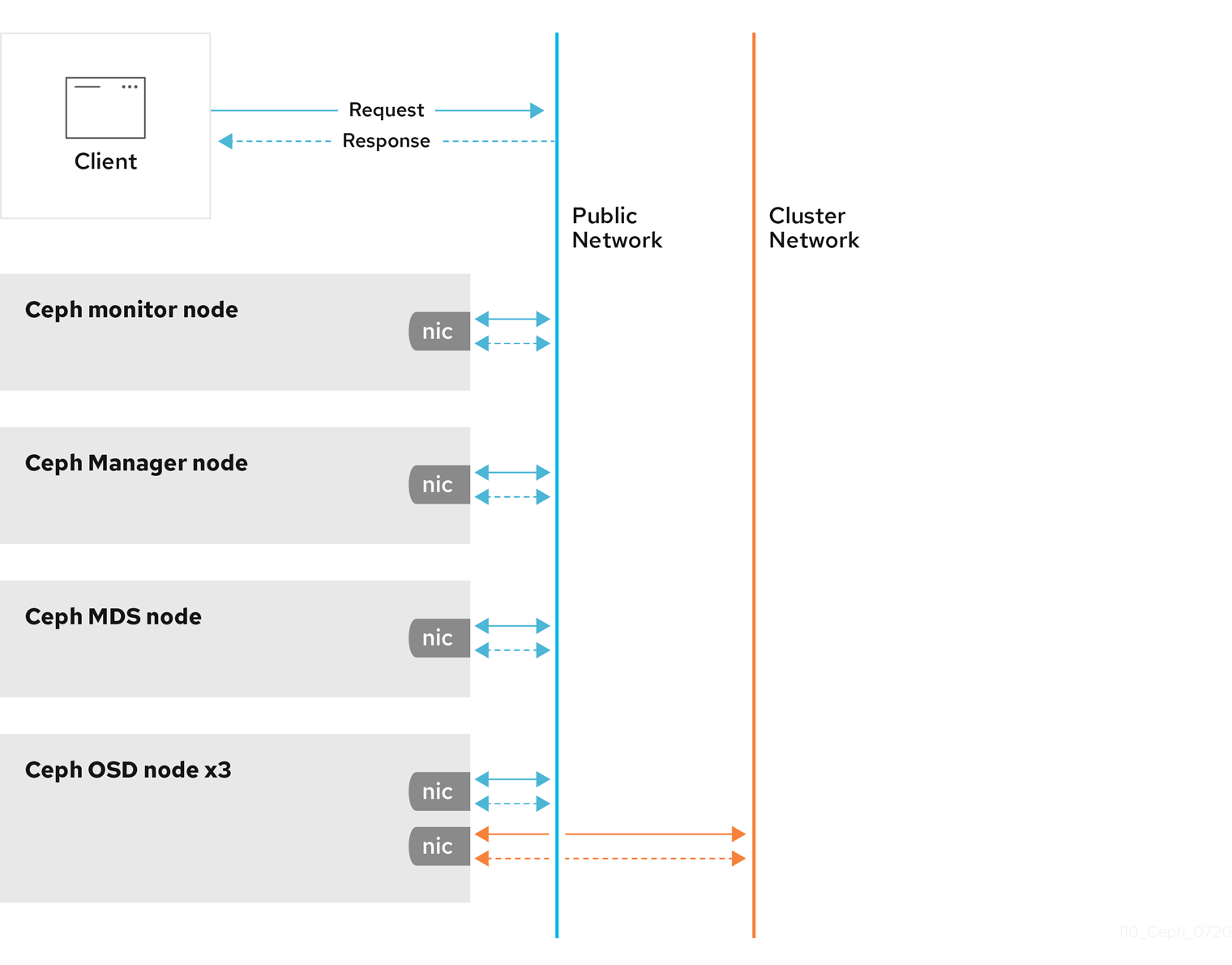

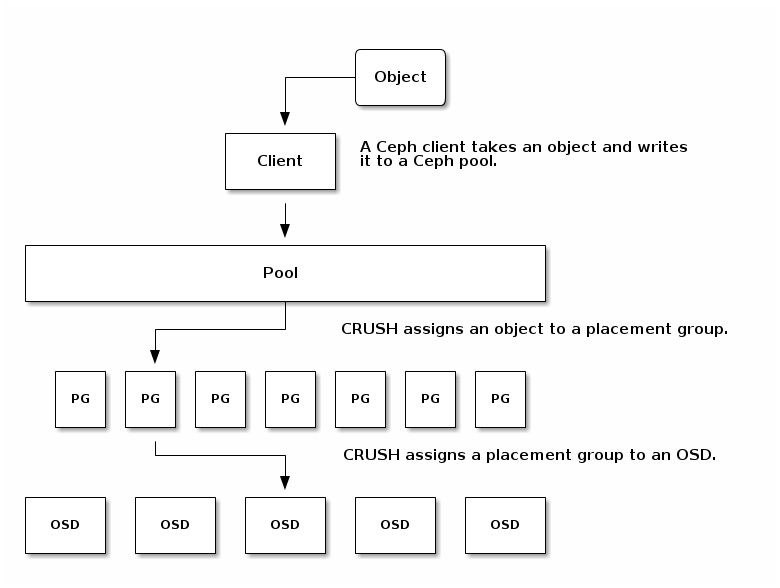

My adventures with Ceph Storage. Part 7: Add a node and expand the cluster storage - Virtual To The Core